Abstract

3D Gaussian Splatting (3DGS) has demonstrated impressive novel view synthesis results while advancing real-time rendering performance. However, it relies heavily on the quality of the initial point cloud, producing blurring and needle-like artifacts in areas with insufficient initial points. This is mainly attributed to the point cloud growth condition in 3DGS, which considers only the average gradient magnitude of points across observable views. It therefore fails to grow large Gaussians that are observable from many viewpoints, because in many of those views they are covered only at the boundaries. To this end, we propose a novel method, named Pixel-GS, that takes into account the number of pixels covered by the Gaussian in each view when computing the growth condition. We use the covered pixel counts as weights to dynamically average the gradients from different views, so that the growth of large Gaussians is promoted. As a result, points within areas with insufficient initial points can be grown more effectively, leading to a more accurate and detailed reconstruction. In addition, we propose a simple yet effective strategy that scales the gradient field according to the distance to the camera, suppressing the growth of floaters near the camera. Extensive qualitative and quantitative experiments on the challenging Mip-NeRF 360 and Tanks & Temples datasets demonstrate that our method achieves state-of-the-art rendering quality while maintaining real-time rendering speed.
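The abstract does not give the exact form of the distance-based gradient scaling. The sketch below assumes one plausible form: each view's gradient is multiplied by a factor that grows with the Gaussian's depth in that view and is clipped to 1, so Gaussians close to the camera receive damped gradients and are less likely to grow into floaters. The function name `depth_gradient_scale`, the parameter `ref_depth`, and the clipped squared-ratio form are all hypothetical assumptions, not the paper's confirmed formula.

```python
import numpy as np


def depth_gradient_scale(grads, depths, ref_depth):
    """Scale per-view NDC gradients by a depth-dependent factor (hypothetical form).

    grads:     (M, 2) array, gradient of the Gaussian's NDC coordinates in each of M views
    depths:    (M,) array, distance from each camera to the Gaussian center
    ref_depth: depth at or beyond which gradients are left unscaled (assumed hyperparameter)
    """
    grads = np.asarray(grads, dtype=float)
    depths = np.asarray(depths, dtype=float)
    # Assumed form: factor = clip((depth / ref_depth)^2, 0, 1).
    # Near the camera the factor is < 1, damping the gradient; far away it saturates at 1.
    scale = np.clip((depths / ref_depth) ** 2, 0.0, 1.0)
    return grads * scale[:, None]
```

For instance, with `ref_depth=1.0`, a gradient of norm 0.4 at depth 0.5 is scaled by 0.25 to 0.1, while the same gradient at depth 2.0 is left unchanged.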

Method

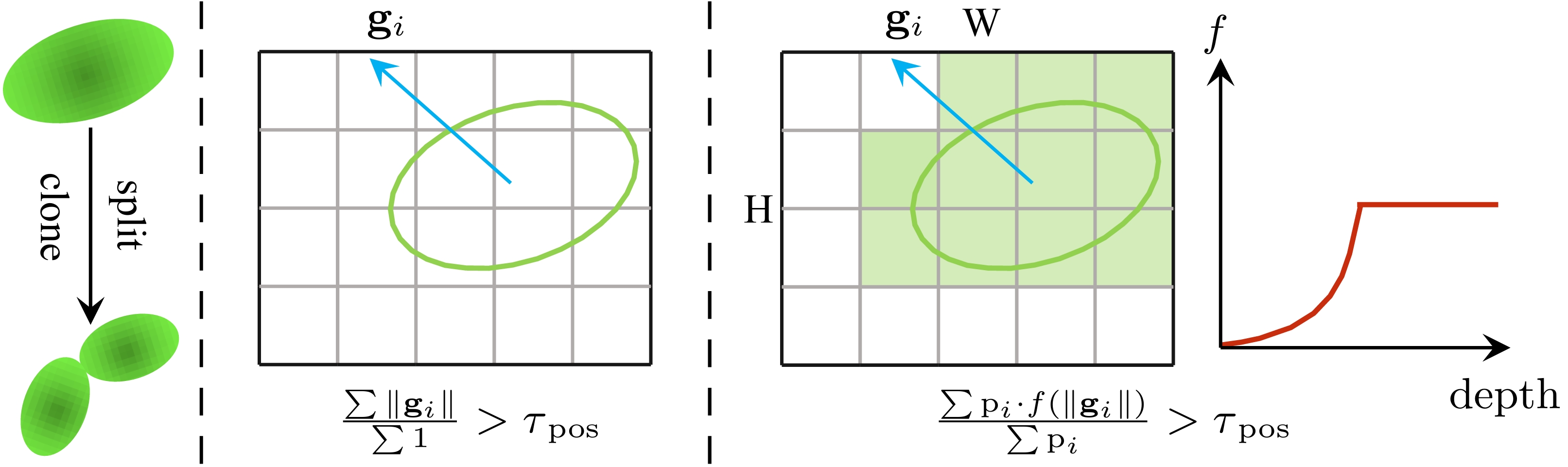

Pipeline of Pixel-GS. \( \mathrm{p}_i \) is the number of pixels that participate in the computation for the Gaussian from viewpoint \( i \), and \( \mathbf{g}_i \) is the gradient of the Gaussian's NDC coordinates from that viewpoint. The condition that decides whether a Gaussian is split or cloned is changed from the one on the left (3DGS) to the one on the right (Pixel-GS).
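The change in growth condition can be sketched as follows, using the caption's \( \mathrm{p}_i \) and \( \mathbf{g}_i \) plus two assumed symbols: \( M \), the number of views in which the Gaussian is visible, and \( \tau \), the growth threshold. 3DGS grows a Gaussian when \( \frac{1}{M}\sum_{i=1}^{M} \lVert \mathbf{g}_i \rVert > \tau \); the pixel-aware variant described in the abstract weights each view by its covered pixel count, \( \frac{\sum_{i=1}^{M} \mathrm{p}_i \lVert \mathbf{g}_i \rVert}{\sum_{i=1}^{M} \mathrm{p}_i} > \tau \). This is a minimal illustrative sketch, not the authors' implementation; the function names are hypothetical.

```python
import numpy as np


def grad_norms(grads):
    """Per-view L2 norm of the 2D NDC-coordinate gradient; grads has shape (M, 2)."""
    return np.linalg.norm(np.asarray(grads, dtype=float), axis=1)


def should_densify_3dgs(grads, threshold):
    """3DGS-style condition: unweighted average of gradient norms over the M views."""
    return grad_norms(grads).mean() > threshold


def should_densify_pixel_gs(grads, pixel_counts, threshold):
    """Pixel-aware condition: average of gradient norms weighted by pixel count p_i."""
    p = np.asarray(pixel_counts, dtype=float)
    weighted_avg = (p * grad_norms(grads)).sum() / p.sum()
    return weighted_avg > threshold
```

For example, take a large Gaussian seen in four views with gradient norms 0.0004, 0.00005, 0.00005, 0.00005 and pixel counts 900, 30, 30, 40, with an illustrative threshold of 0.0002. The unweighted average is about 0.00014, so 3DGS does not grow it, while the pixel-weighted average is 0.000365, so the pixel-aware condition does. Views in which the Gaussian covers only a few pixels no longer dilute the gradient from the view where it covers many.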

Results

Comparison with 3DGS

Here, we present qualitative comparisons among 3DGS*, Pixel-GS (Ours), and Ground Truth.

Drop a certain proportion of the initial point cloud.

We randomly discard a certain proportion of the SfM points used for scene initialization. The figure below compares the reconstruction results of 3DGS, Pixel-GS (Ours), and Ground Truth on the bicycle scene from the Mip-NeRF 360 dataset.

BibTeX

@inproceedings{zhang2024pixelgs,
  title = {Pixel-GS: Density Control with Pixel-aware Gradient for 3D Gaussian Splatting},
  author = {Zhang, Zheng and Hu, Wenbo and Lao, Yixing and He, Tong and Zhao, Hengshuang},
  booktitle = {ECCV},
  year = {2024}
}